Research

Generalized Planning

"Be wise, generalize!"

Planning is well known to be a hard problem. We are developing methods for acquiring useful knowledge while computing plans for small problem instances. This knowledge is then used to aid planning in larger, more difficult problems.

Often, our approaches can extract algorithmic, generalized plans that efficiently solve large classes of similar problems, as well as problems with uncertainty in the quantities of objects that the agent needs to work with. The generalized plans we compute are easier to understand and are generated with proofs of correctness.
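To make the idea of a generalized plan concrete, here is a minimal toy sketch. It is not the lab's algorithm or code: the `DeliveryState` class, the crate-loading domain, and the action names are all hypothetical, chosen only to show how one plan with a loop can cover problem instances with any number of objects.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class DeliveryState:
    """Hypothetical toy world: crates wait at a dock and must end up in a truck."""
    at_dock: List[str] = field(default_factory=list)
    in_truck: List[str] = field(default_factory=list)
    holding: Optional[str] = None


def generalized_plan(state: DeliveryState) -> List[Tuple[str, str]]:
    """A single loop-based plan that handles any number of crates.

    The loop terminates because every iteration removes one crate from
    the dock, so the number of remaining crates strictly decreases.
    Returns the sequence of (action, crate) steps that were executed.
    """
    executed = []
    while state.at_dock:
        # Pick up some crate that is still at the dock.
        crate = state.at_dock.pop()
        state.holding = crate
        executed.append(("pick_up", crate))
        # Load the crate that is currently held into the truck.
        state.in_truck.append(state.holding)
        state.holding = None
        executed.append(("load", crate))
    return executed


# The same plan is reused unchanged on instances of very different sizes.
for n in (1, 5, 1000):
    state = DeliveryState(at_dock=[f"crate{i}" for i in range(n)])
    steps = generalized_plan(state)
    print(n, len(steps), len(state.in_truck))
```

A classical planner would have to search for a separate action sequence for each instance size. The looped plan is computed once, and the decreasing count of crates at the dock gives a simple termination argument of the kind a correctness proof would rely on.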

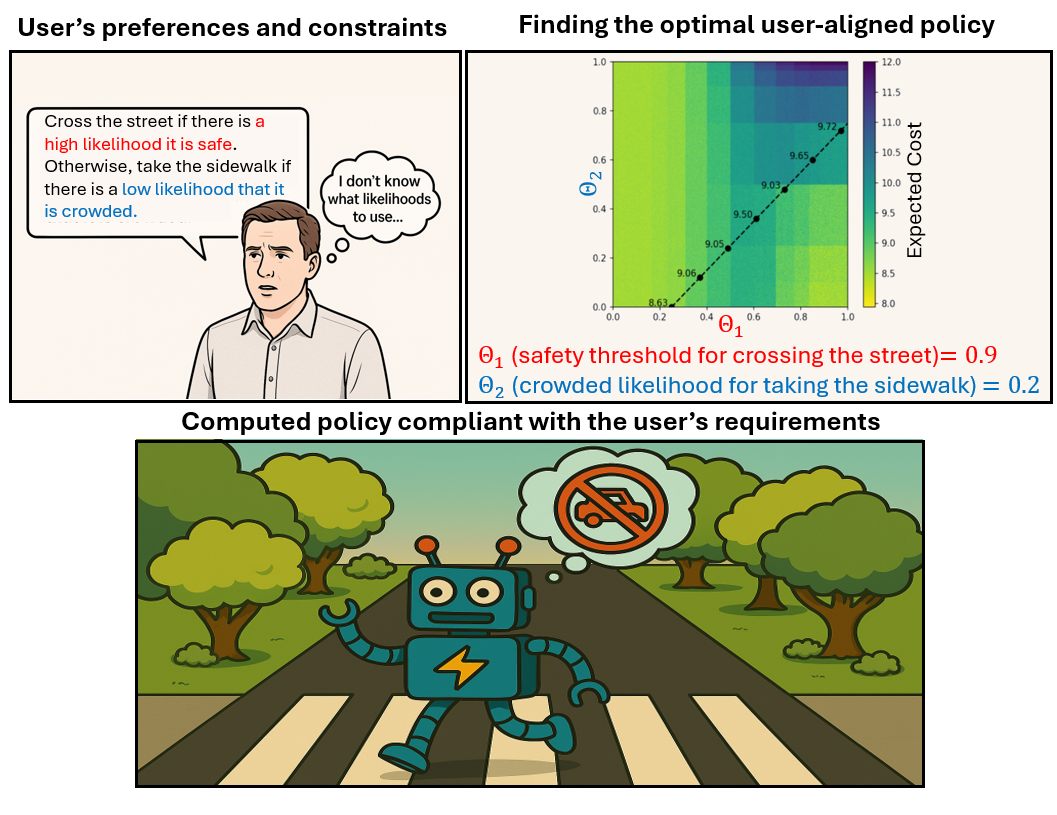

User-Aligned Planning in Partially Observable Environments

Can we align an AI system with users' expectations when it has limited, noisy information about the real world? How do we refine a user's high-level, imprecise requirements into an executable behavior for the agent?

We are developing novel frameworks that allow users to express preferences and constraints on agents' behavior in partially observable settings. Early results show strong convergence properties toward the optimal user-aligned behavior.

JEDAI: An Educational System for AI Planning and Reasoning

The objective of this project is to introduce AI planning concepts using mobile manipulator robots. It uses a visual programming interface to make these concepts easier to grasp. Users can get the robot to accomplish desired tasks by dynamically populating puzzle-shaped blocks that encode the robot's possible actions. This allows users to carry out navigation, planning, and manipulation by connecting blocks instead of writing code. AI explanation techniques are used to inform users when their plan to achieve a particular goal fails. This helps them better grasp the fundamentals of AI planning.

Get JEDAI

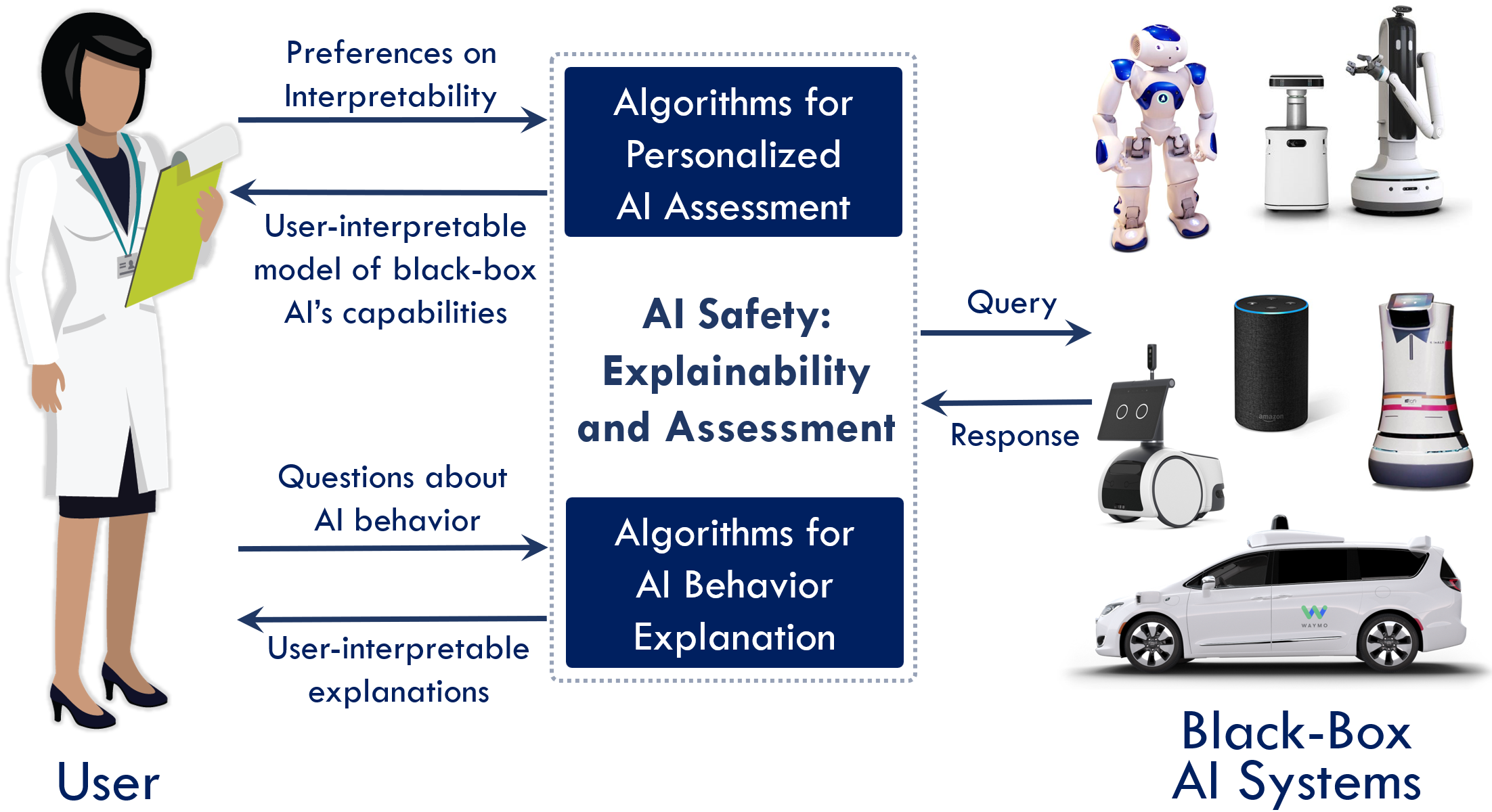

Autonomous Agents That Are Easy to Understand and Safe to Work With

How would a non-expert assess what their AI system can or can’t do safely? Today’s AI systems require experts to evaluate them, which limits the deployability and safe usability of AI systems.

We are developing approaches for autonomous, user-driven assessment of the capabilities of black-box taskable AI systems, even as the AI systems learn and adapt. These methods would enable users to continually evaluate and understand their AI systems in their own idiosyncratic deployments. They would prevent performance failures and accidents that can arise when AI systems are used beyond their dynamic envelopes of safe applicability. We are also designing approaches for computing user-aligned explanations of AI behavior. Together, these approaches improve the safety and usability of AI systems and enable autonomous, on-the-fly training paradigms for AI systems.

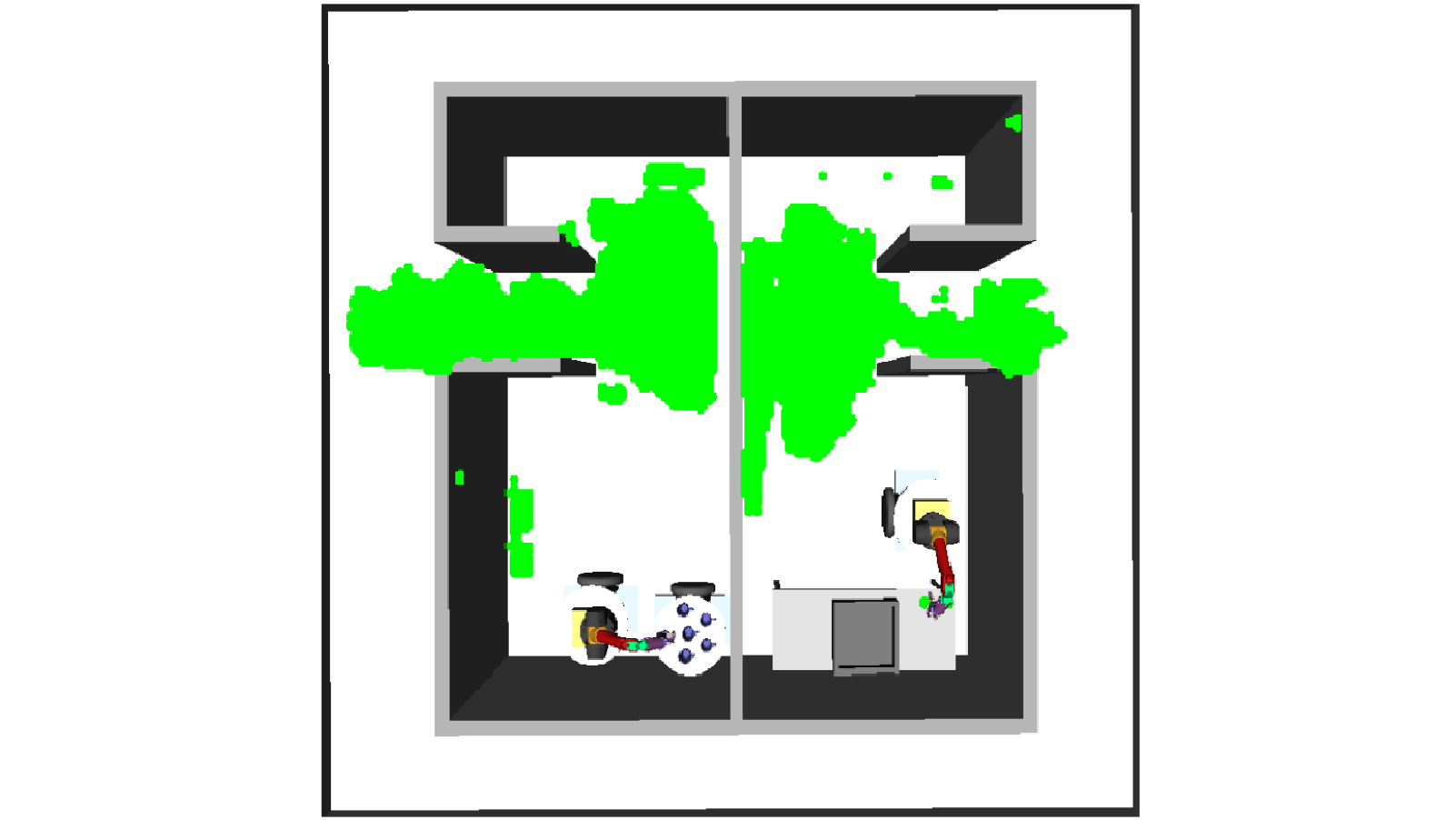

Synthesis and Analysis of Abstractions for Autonomy

In order to solve complex, long-horizon tasks such as doing the laundry, a robot needs to compute high-level strategies (e.g., would it be useful to put all the dirty clothes in a basket first?) as well as the joint movements that it should execute. Unfortunately, approaches for high-level planning rely on task-planning abstractions that are lossy and can produce “solutions” that have no feasible executions.

We are developing new methods for computing safe task-planning abstractions and for dynamically refining the task-planning abstraction to produce combined task and motion plans that are guaranteed to be executable. We are also studying abstractions in sequential decision making (SDM): evaluating the effect of abstractions on models for SDM, and searching for abstractions that would aid in solving a given SDM problem.
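The interplay between a lossy abstraction and its refinement can be sketched with a small toy example. This is an illustration under stated assumptions, not the lab's method: the shelf domain, the `in_front_of` map, and all function names are hypothetical, and the "motion planner" is replaced by a simple lookup of which objects physically block which. The blocking relation is assumed to be acyclic.

```python
def task_plan(target, known_obstructions):
    """Abstract planner: move aside every *known* blocker, then pick the target."""
    plan = []

    def clear(obj):
        for blocker in known_obstructions.get(obj, []):
            clear(blocker)  # a blocker may itself be blocked
            step = ("move_aside", blocker)
            if step not in plan:
                plan.append(step)

    clear(target)
    plan.append(("pick", target))
    return plan


def first_infeasible_step(plan, in_front_of):
    """Stand-in for motion planning (hypothetical).

    Simulates the plan and returns (obj, blocker) for the first step whose
    object is still physically blocked, or None if every step is feasible.
    """
    removed = set()
    for _action, obj in plan:
        blockers = [b for b in in_front_of.get(obj, []) if b not in removed]
        if blockers:
            return obj, blockers[0]
        removed.add(obj)
    return None


def plan_with_refinement(target, in_front_of):
    """Replan until the high-level plan has a feasible execution."""
    known = {}  # the abstraction starts out ignoring all obstructions
    while True:
        plan = task_plan(target, known)
        failure = first_infeasible_step(plan, in_front_of)
        if failure is None:
            return plan
        obj, blocker = failure
        # Refine the abstraction with the fact discovered at the low level.
        known.setdefault(obj, []).append(blocker)


# The mug sits behind a box, which sits behind a can.
print(plan_with_refinement("mug", {"mug": ["box"], "box": ["can"]}))
```

The first abstract plan is just "pick the mug", which has no feasible execution. Each failed feasibility check adds one obstruction fact to the abstract model, so the loop ends once the model contains every fact needed for an executable plan.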

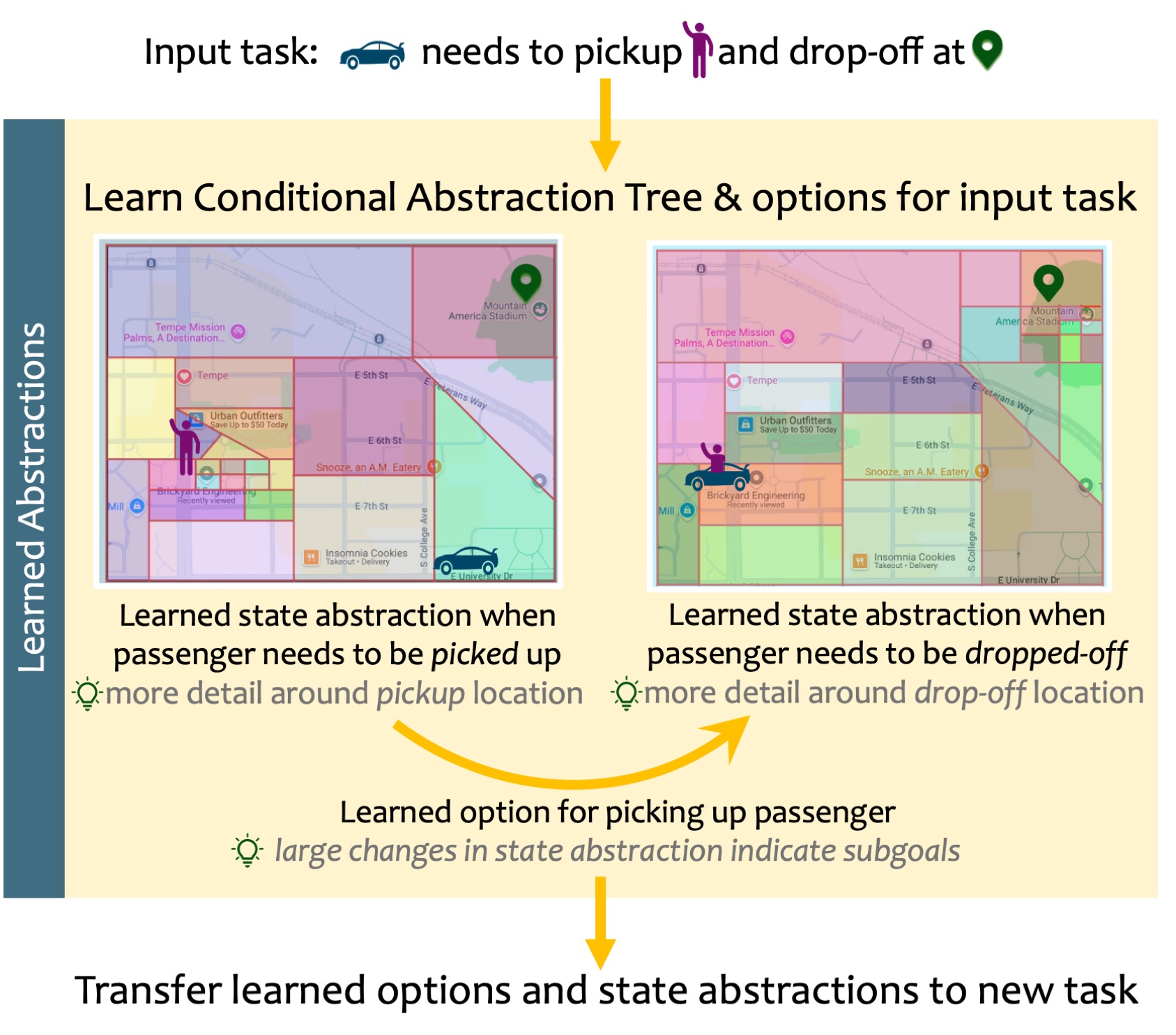

Context-Aware Abstractions for Generalization in RL

Reinforcement learning agents struggle to scale to complex, real-world problems due to sample inefficiency, limited interaction opportunities, and delayed feedback—particularly in long-horizon tasks with sparse rewards and non-stationary dynamics.

We are developing new abstraction algorithms that dynamically learn and utilize context-sensitive abstractions during RL. These methods also support autonomous option invention, and yield stronger cross-task transfer and generalizability.

News

We have extended the submission deadline for Generalization in Planning #GenPlan2025 workshop at #AAAI2025 to December 11th! Consider submitting your recent or ongoing work on generalization in sequential decision-making.

More details at https://t.co/PktEANmIkG pic.twitter.com/RgEmB4Xsvc

— Rashmeet Kaur Nayyar (@rashmeet_nayyar) November 30, 2024

I'll present our work "Epistemic Exploration for Generalizable Planning and Learning in Non-Stationary Settings" at #ICAPS2024 today.

Joint Work w/ @RushangKaria, Alberto Speranzon, @sidsrivast

⏰ 01:45 PM MDT in Room KC 203 @ICAPSConference

Join the session to learn more. pic.twitter.com/YSGuwtgZxP

— Pulkit Verma (@pulkit_verma) June 5, 2024

Congratulations to @pulkit_verma!! His dissertation helped kickstart the emerging area of user-driven assessment of AI systems that can learn and plan. https://t.co/hNBSyA0uZH

— Siddharth Srivastava (@sidsrivast) May 12, 2024

Congratulations to Dr. @shah__naman for a successful PhD defense and a great dissertation talk! 🥳🍾🎉

A special thanks to the committee, George Konidaris, @rao2z, Alberto Speranzon and Yu Zhang! pic.twitter.com/dr7Zi6Ykt7

— Siddharth Srivastava (@sidsrivast) April 5, 2024

Congratulations to Dr. @pulkit_verma for completing his dissertation with a fabulous presentation and defense today! 🍾🎇🥳

Here's a big thanks to the committee members: Nancy Cooke, Georgios Fainekos, and Yu Zhang for all their time and effort along the way! pic.twitter.com/0JxiXjFoEE

— Siddharth Srivastava (@sidsrivast) April 4, 2024

It’s a wrap! Check out this recap of the excellent #AAAI 2024 Spring Symposium, held last week at Stanford, facilitated by #SCAI Associate Professor @sidsrivast and his #PhD student @pulkit_verma. Thanks to all who attended and shared their work and perspectives.…

— ASU School of Computing and Augmented Intelligence (@SCAI_ASU) April 4, 2024

Congrats to #SCAI Associate Professor @sidsrivast who has been named a Scialog Fellow by @RCSA1.

As one of only approximately 50 new Fellows, Srivastava will work to develop innovative approaches and solutions to multidisciplinary problems. https://t.co/74FBkRQxea

— ASU School of Computing and Augmented Intelligence (@SCAI_ASU) March 14, 2024

How does logic arise from a real-valued real world?

Our new approach considers this in the context of robot planning, and invents predicates and logic-based models from raw robot trajectories without any annotations.

Joint w/ @shah__naman Jayesh Nagpal @pulkit_verma

🧵..1/4 pic.twitter.com/zpWekpfY4k

— Siddharth Srivastava (@sidsrivast) February 22, 2024

I'm at #NeurIPS2023 through Saturday. Let's meet up if you are interested in generalizable planning and learning or in AI assessment!

I'm also recruiting graduate students. Happy to talk about our ongoing projects and life @ASU if you are considering joining us!

— Siddharth Srivastava (@sidsrivast) December 13, 2023

Can we develop reliable AI systems for independent assessment of systems that can learn and plan?

Our #NeurIPS2023 work develops a new approach for #AIAssessment in stochastic settings:

Poster #1510 this evening!

Joint w/ @pulkit_verma @KariaRushang https://t.co/zk2zLDiU4Y pic.twitter.com/TOAJMxENk1

— Siddharth Srivastava (@sidsrivast) December 13, 2023

On a related note, we just heard that our AAAI Spring Symposium on AI Assessment has been accepted! Looking forward to productive discussions. Paper submissions welcome!

Details: https://t.co/JEqarTpXe2

Co-organizers: @pulkit_verma, @HazemTorfah, Rohan Chitnis, Georgios Fainekos https://t.co/i7tuB4M2Fp pic.twitter.com/1HidcK0wnY

— Siddharth Srivastava (@sidsrivast) November 8, 2023

It's good to see calls for AI assessment getting some traction! We need more research on reliable post-deployment assessment of systems that can learn and act. Some of our work has been addressing this emerging problem (https://t.co/GF6sPo5qmg under "AI assessment"). pic.twitter.com/AtuAK08lid

— Siddharth Srivastava (@sidsrivast) November 8, 2023

Had a great time discussing Planning and RL research during the #IJCAI2023 PRL workshop!

Thanks @harsha_kokel and the org team for putting together such a nice program (link below!) 🙏👏 https://t.co/OrBefWEg13 https://t.co/mmWiIYO1KT

— Siddharth Srivastava (@sidsrivast) August 27, 2023

New Article: "Hierarchical Decompositions and Termination Analysis for Generalized Planning" by Srivastava https://t.co/6YwCTfCKxP

— J. AI Research-JAIR (@JAIR_Editor) August 1, 2023

Do consider attending this tutorial led by @shah__naman if you plan to be at #IJCAI2023 and are interested in using robots to solve complex tasks, or in long-horizon, neuro-symbolic planning and learning for hybrid (discrete + continuous) problems.

Details in original thread! https://t.co/D1tMII1Jnk

— Siddharth Srivastava (@sidsrivast) July 6, 2023

Learning abstractions for RL in non-image-based tasks is hard.

We were surprised to find that learning a conditional abstraction tree (CAT) while doing RL can improve sample efficiency to the point where vanilla Q-learning can outperform SOTA RL methods. #UAI2023

🧵.../1 pic.twitter.com/v1RroUtPZy

— Siddharth Srivastava (@sidsrivast) June 15, 2023

Happy to share a new class of algorithms for generalized planning: https://t.co/wOLOj2F8sH

The paper addresses a key problem in computing general plans/policies: will a given generalized plan terminate/reach good states? This is unsolvable in general due to the halting problem. pic.twitter.com/aUXn2MfBNi

— Siddharth Srivastava (@sidsrivast) December 8, 2022

Our #NeurIPS2022 paper develops a new approach for few-shot learning of generalized policy automata (GPA) for relational stochastic shortest path planning problems.

The learned GPAs can be used to transfer learning and accelerate SSP solvers on much larger problem instances! pic.twitter.com/dRp5sZNeps

— Siddharth Srivastava (@sidsrivast) November 23, 2022

We’d like AI systems to continually learn and adapt, but how would a user figure out what their black-box AI (BBAI) system can safely do at any point?

This is difficult especially when the user and the BBAI use different representations. Our #KR2022 work addresses this problem. pic.twitter.com/0hzhk7QNZ0

— Siddharth Srivastava (@sidsrivast) July 31, 2022

Reliable planning and learning in problems without image-based state representations remains challenging. In our #IJCAI2022 work we found that doing an abstraction before learning results in generalized Q functions and zero-shot transfer to much larger problems!

w/ @KariaRushang pic.twitter.com/ksFt7FWksv

— Siddharth Srivastava (@sidsrivast) July 20, 2022

Consider submitting your recent work on generalization/transfer in all forms of planning and sequential decision making! Due ~today, May 20th in NeurIPS or IJCAI format https://t.co/1DHcFEcFIt

— Siddharth Srivastava (@sidsrivast) May 20, 2022

Congratulations to @shah_naman, @pulkit_verma, Trevor Angle and the entire dev team for creating JEDAI and winning the Best Demo Award @aamas2022! 🎉

JEDAI (JEDAI explains decision-making AI) is an interactive learning tool for AI+robotics that provides explanations autonomously.

— Siddharth Srivastava (@sidsrivast) May 14, 2022

Task and motion planning (TAMP) captures the essence of what we want robots to be able to do in a range of settings. But cobots need to communicate with humans to avoid potential conflicts. Our #ICRA2022 work develops a unified framework for integrating communication with TAMP. pic.twitter.com/ObVDkWSZtZ

— Siddharth Srivastava (@sidsrivast) April 21, 2022

Consider submitting a piece about your work on representations for generalization and transfer in all forms of AI planning/sdm! Links below. https://t.co/ogoNFMzvQo

— Siddharth Srivastava (@sidsrivast) April 19, 2022

We use hand-coded state and action abstractions extensively in AI planning. Where do these abstractions come from? Our #aamas2022 paper develops methods for learning such abstractions from scratch and for using them to solve robot planning problems efficiently and reliably.🗝️🥡👇 pic.twitter.com/lp3U8rpK6R

— Siddharth Srivastava (@sidsrivast) March 5, 2022

Can we assess what Black-Box #AI systems can and can’t do reliably as they change/adapt to changing situations?

In our #AAAI2022 paper, @pulkit_verma and @rashmeet_nayyar (= contributors) address this with the foundations for efficient /differential/ assessment of AI systems. pic.twitter.com/5xmOmSS8XL

— Siddharth Srivastava (@sidsrivast) December 3, 2021

Excited to be a part of the workshop on Generalization in Planning (GenPlan) at IJCAI '21!

We welcome current/recent work on the synthesis or learning of plans and policies with an emphasis on generalizability and transfer.

Submission deadline: May 9. https://t.co/bfwxUGXXqm

— Siddharth Srivastava (@sidsrivast) April 19, 2021

Congratulations to @pulkit_verma and Rushang Karia for their first first-authored papers! Pulkit’s work investigates how users may assess the limits and capabilities of their AI systems while Rushang’s develops self-training algorithms for speeding up AI planning. #aaai2021 (1/2)

— Siddharth Srivastava (@sidsrivast) December 16, 2020

Imagine a robot doing your laundry or making a cup of tea for you. #ASUEngineering @CIDSEASU assistant professor @sidsrivast is working to equip #artificialintelligence with the capability to do real-world tasks. #AI https://t.co/RrcfGUP0wr

— ASU Ira A. Fulton Schools of Engineering (@ASUEngineering) August 11, 2020

Can we use #DeepLearning to speed up robot planning while maintaining theoretical guarantees (correctness, probabilistic completeness)? Dan and Kislay's work indicates YES, but with a few changes to planning algorithms. Check it out at https://t.co/tu7TSRcwBT and @icra20 online! pic.twitter.com/fQQ13qyzwJ

— Siddharth Srivastava (@sidsrivast) May 31, 2020

Congratulations to Rashmeet for winning the Chambliss Medal for her research project on using #AI for reliable inference about intergalactic space! @asunow article featuring this interdisciplinary #ASUEngineering work: https://t.co/bkMLSxT5Wo

— Siddharth Srivastava (@sidsrivast) May 27, 2020

Our new “anytime” algorithm for stochastic task and motion planning computes better robot policies as it gets more time. Our YuMi uses this to autonomously build Keva structures! @icra2020

Personal favorite: https://t.co/BPti3sJp1E

Paper + videos: https://t.co/lsDf7So4gl pic.twitter.com/zWLCZlL71h

— Siddharth Srivastava (@sidsrivast) March 20, 2020

Congrats to Daniel, Naman, Kislay, Deepak & Pranav for their first papers, accepted at #ICRA2020! Their #NSF_CISE funded #AI research shows how to compute reliable robot plans more efficiently using (1)abstractions & (2)#DeepLearning. Camera-readies coming soon! #ICRA #NSFfunded

— Siddharth Srivastava (@sidsrivast) January 23, 2020

How can we train people to use adaptive #AI systems, whose behavior and functionality is expected to change from day to day? Our approach: make the AI system self-explaining! #ASUFoW https://t.co/VwFs30sJU2

— Siddharth Srivastava (@sidsrivast) September 14, 2019

Had a great conversation about #AI with Rami Kalla @pointintimes!

Audio podcast: https://t.co/xYeMtJHhzd

Video linked in the original post. https://t.co/swC01xIhii

— Siddharth Srivastava (@sidsrivast) August 7, 2019

As anyone who has talked to a 3yo knows, explaining why something can’t be done can be much harder than explaining a solution. Can #AI systems explain why they failed to solve a given problem? Sarath's new work takes first steps in explaining unsolvability https://t.co/lQuRSeKc4b

— Siddharth Srivastava (@sidsrivast) March 27, 2019

Dan and Kislay's new work on motion planning tries to get the best of both worlds: the learn and link planner (LLP) learns and improves with experience. It is also sound and probabilistically complete. Check out the results here: https://t.co/v21OfXJgtB

— Siddharth Srivastava (@sidsrivast) March 11, 2019

Our new work aims at allowing designers and users to choose whether their AI systems clarify, or protect information.

A Unified Framework for Planning in Adversarial and Cooperative Environments. Kulkarni et al. https://t.co/7ibzWE7KwC #AAAI2019 https://t.co/BQZW0umryL

— AAIR Lab (@AAIRLabASU) February 5, 2019

Alfred says Hello!! https://t.co/08H96AoUMq

— AAIR Lab (@AAIRLabASU) January 29, 2019