AI systems are increasingly interacting with users who are not experts in AI. This has led to growing calls for better safety assessment and regulation of AI systems. However, broad questions remain about the processes and technical approaches that would be required to conceptualize, express, manage, and enforce such regulations for adaptive AI systems, which, by nature, are expected to exhibit different behaviors as they adapt to evolving user requirements and deployment environments.

This symposium will foster research and development of new paradigms for the assessment and design of AI systems that are not only efficient according to a task-based performance measure, but also safe to use by diverse groups of users and compliant with the relevant regulatory frameworks. It will highlight and engender research on new paradigms and algorithms for assessing AI systems' compliance with a variety of evolving safety and regulatory requirements, along with methods for expressing such requirements.

We also expect that the symposium will lead to a productive exchange of ideas across two highly active fields of research, viz., AI and formal methods. The organizing team includes active researchers from both fields, and our invited speakers include prominent researchers from both areas.

Please feel free to send workshop-related queries to aia2024.symposium@gmail.com.

Although there is a growing need for independent assessment and regulation of AI systems, broad questions remain about the processes and technical approaches that would be required to conceptualize, express, manage, assess, and enforce such regulations for adaptive AI systems.

This symposium addresses research gaps in assessing the compliance of adaptive AI systems (systems capable of planning/learning) in the presence of post-deployment changes in requirements, in user-specific objectives, in deployment environments, and in the AI systems themselves.

These research problems go beyond the classical notions of verification and validation, where operational requirements and system specifications are available a priori. In contrast, adaptive AI systems such as household robots are expected to adapt to day-to-day changes in their requirements (which can be user-provided) and environments, as well as to changes resulting from system updates and learning. The symposium will feature invited talks by researchers from AI and formal methods, as well as talks on contributed papers.

We welcome submissions on topics such as:

Submissions will take the form of extended abstracts whose first author will be the speaker; each speaker may be the first author of at most one submission. Each submission must be 1-2 pages long, excluding references (in the AAAI 2024 style), and may refer to joint work with other collaborators. Co-authored papers should list the expected speaker as the first author of the paper.

Submissions can be a summary/survey of recent results, new research, or a position paper. There are no formal proceedings, and we encourage submissions of work presented or submitted elsewhere (no copyright transfer is required, only permission to post the abstract on the symposium site).

Papers can be submitted via EasyChair at https://easychair.org/my/conference?conf=aia2024.

| Submission deadline | |

| Author notification | |

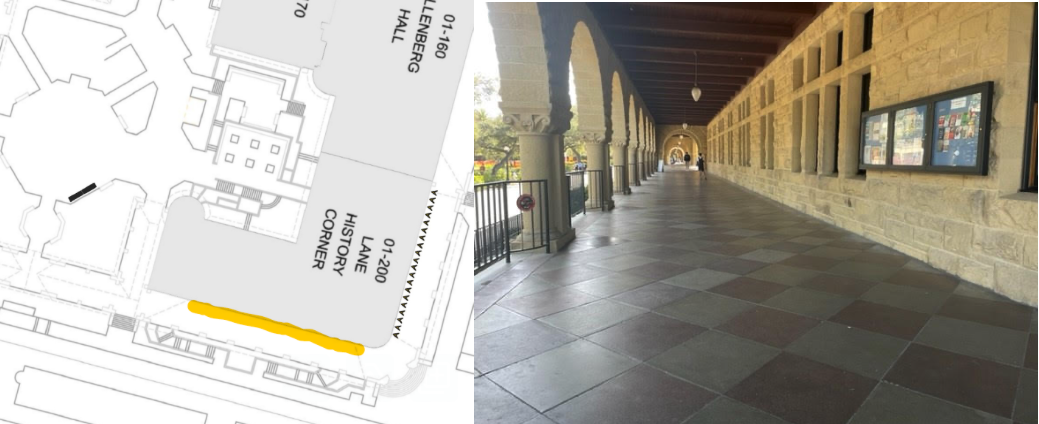

| Symposium | March 25-27, 2024 |

Kamalika Chaudhuri, University of California San Diego, USA and Meta AI

Privacy in Foundation Models: Measurement and Mitigation

Large foundation models that do representation learning have shown enormous promise in transforming AI. However, a major barrier to their widespread adoption is that many of the larger models are trained on very large datasets curated from the internet, which might inadvertently contain sensitive information; this can lead to privacy concerns if a model memorizes this information from its training data.

In this talk, I will discuss some of our recent research on measuring memorization in large learning models, as well as on building models with rigorous privacy guarantees. For measurement, we propose a new metric called "deja vu memorization" for image representation learning models, and show that this kind of memorization can be mitigated with suitable choices of hyperparameters. For representation learning with rigorous privacy guarantees, we develop ViP, the first foundation model for computer vision with differential privacy (one of the gold standards in private data analysis in recent years), and evaluate its privacy-utility tradeoff. Joint work with Florian Bordes, Chuan Guo, Casey Meehan, Maziar Sanjabi, Pascal Vincent and Yaodong Yu.

Bio: Kamalika Chaudhuri is a Professor at the University of California, San Diego and a Research Scientist at FAIR in Meta. She received a Bachelor of Technology degree in Computer Science and Engineering in 2002 from the Indian Institute of Technology, Kanpur, and a PhD in Computer Science from the University of California at Berkeley in 2007. She received an NSF CAREER Award in 2013 and a Hellman Faculty Fellowship in 2012. She has served as the program co-chair for AISTATS 2019 and ICML 2019, and as the General Chair for ICML 2022. Kamalika’s research interests lie in trustworthy machine learning, or machine learning beyond accuracy, which includes problems such as learning from sensitive data while preserving privacy, learning under sampling bias, learning in the presence of an adversary, and learning from off-distribution data.

Sriraam Natarajan, University of Texas at Dallas, USA

Human-allied Assessment of AI Systems: A Two-step Approach

Historically, Artificial Intelligence has taken either a symbolic route for representing and reasoning about objects at a higher level or a statistical route for learning complex models from large data. To achieve true AI, it is necessary to make these different paths meet and to enable seamless human interaction. I argue that the first step in this direction is to obtain rich human inputs beyond labels (to improve trust and robustness). To this effect, I will present recent progress that allows for more reasonable human interaction, in which human input is taken as "advice" and learning algorithms combine this advice with data. Next, I argue that we need to "close the loop", soliciting information from humans as needed by explaining the models in a naturally interpretable manner. As I show empirically, this allows for seamless interactions with the human expert.

Bio: Sriraam Natarajan is a Professor and the Director of the Center for ML in the Department of Computer Science at the University of Texas at Dallas, a hessian.AI fellow at TU Darmstadt, and an RBDSCAII Distinguished Faculty Fellow at IIT Madras. His research interests lie in the field of Artificial Intelligence, with emphasis on Machine Learning, Statistical Relational Learning and AI, Reinforcement Learning, Graphical Models and Biomedical Applications. He is an AAAI Senior Member and has received the Young Investigator Award from the US Army Research Office, industry awards, the ECSS Graduate Teaching Award from UTD, and the IU Trustees Teaching Award from Indiana University. He is the program chair of AAAI 2024 and the general chair of ACM CoDS-COMAD 2024. He was the specialty chief editor of the Frontiers in ML and AI journal, and is an associate editor of JAIR, DAMI and Big Data journals.

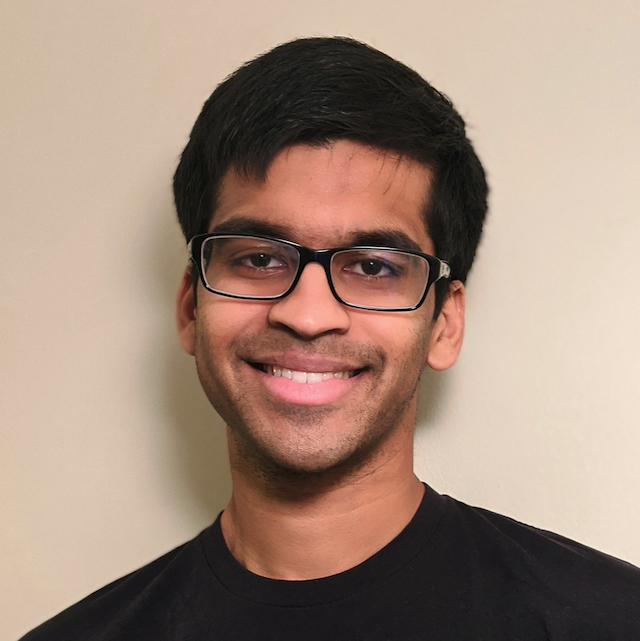

Sriram Sankaranarayanan, University of Colorado Boulder, USA

Predicting Autonomous Agent Behaviors

How do we predict what an agent will do next? This question is central to problems in assured autonomy such as runtime monitoring and safety enforcement. Numerous machine learning techniques exist to answer such questions. However, these approaches often fail to incorporate background information, such as the specific intents or goals of the agents. Furthermore, as the time horizon becomes longer, the predictions tend to become less reliable. We first show how incorporating the intents in the form of temporal logic specifications yields more accurate predictions of future behaviors over a longer time horizon. Next, we will present a framework that enables planning against an agent's possible future actions. We will demonstrate applications to a range of tasks that agents perform, from rock-paper-scissors to cataract surgery and furniture assembly.

Bio: Sriram Sankaranarayanan is a professor of Computer Science at the University of Colorado, Boulder. His research interests include automatic techniques for reasoning about the behavior of computer and cyber-physical systems. Sriram obtained a PhD in 2005 from Stanford University, where he was advised by Zohar Manna and Henny Sipma. Subsequently, he worked as a research staff member at NEC research labs in Princeton, NJ. He has been on the faculty at CU Boulder since 2009. Sriram has been awarded the prestigious Innovation Award from Coursera for his online “Data Structures and Algorithms” specialization, which has taught the fundamentals of data structures and algorithms, with an emphasis on applications in data science, to more than 10,000 students.

Sanjit A. Seshia, University of California Berkeley, USA

Towards a Design Flow for Verified AI-Based Autonomy

Verified artificial intelligence (AI) is the goal of designing AI-based systems that have strong, ideally provable, assurances of correctness with respect to formally specified requirements. This talk will review the main challenges to achieving Verified AI, and the initial progress the research community has made towards this goal. A particular focus will be on AI-based autonomous and semi-autonomous cyber-physical systems (CPS), and on the role of environment/world modeling in user-aligned assessment of AI safety. Building on the initial progress, there is a need to develop a new generation of design automation techniques, rooted in formal methods, to enable and support the routine development of high-assurance AI-based autonomy. I will describe our work on formal methods for Verified AI-based autonomy, implemented in the open-source Scenic and VerifAI toolkits. The use of these tools will be demonstrated in industrial case studies involving deep learning-based autonomy in ground and air vehicles.

Bio: Sanjit A. Seshia is the Cadence Founders Chair Professor in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley. He received an M.S. and Ph.D. in Computer Science from Carnegie Mellon University, and a B.Tech. in Computer Science and Engineering from the Indian Institute of Technology, Bombay. His research interests are in formal methods for dependable and secure computing, spanning the areas of cyber-physical systems (CPS), computer security, distributed systems, artificial intelligence (AI), machine learning, and robotics. He has made pioneering contributions to the areas of satisfiability modulo theories (SMT), SMT-based verification, and inductive program synthesis. He is co-author of a widely used textbook on embedded, cyber-physical systems and has led the development of technologies for cyber-physical systems education based on formal methods. His awards and honors include a Presidential Early Career Award for Scientists and Engineers (PECASE), an Alfred P. Sloan Research Fellowship, the Frederick Emmons Terman Award for contributions to electrical engineering and computer science education, the Donald O. Pederson Best Paper Award for the IEEE Transactions on CAD, the IEEE Technical Committee on Cyber-Physical Systems (TCCPS) Mid-Career Award, the Computer-Aided Verification (CAV) Award for pioneering contributions to the foundations of SMT solving, and the Distinguished Alumnus Award from IIT Bombay. He is a Fellow of the ACM and the IEEE.